There’s been a tension in physics over the last century or so between two theories. Both have proven valuable for predicting the behavior of the universe and for advancing engineering, yet they seem to make completely incompatible claims about the nature of reality.

I’m referring, of course, to the general theory of relativity and quantum theory. Ordinarily, these two theories tackle very different questions about the universe (one at the largest scale and the other at the smallest), but they come together in the study of black holes: regions of space from which no information can escape.

Many enterprise organizations today feel a similar tension between two approaches to enterprise networking, both of which have produced excellent results for software companies for years. That tension revolves around Kubernetes. As a manager of cloud security and network infrastructure for a large regional bank put it, “Kubernetes ends up being this black hole of networking.”

The analogy is apt. Like black holes, Kubernetes abstracts away much of the information traditionally used to understand and control networks. Like quantum theory, Kubernetes offers a new way to think about your network, but this new way of thinking is generally incompatible with existing network tools, as well as with applications that don’t run on Kubernetes. But what makes Kubernetes so challenging for existing networks?

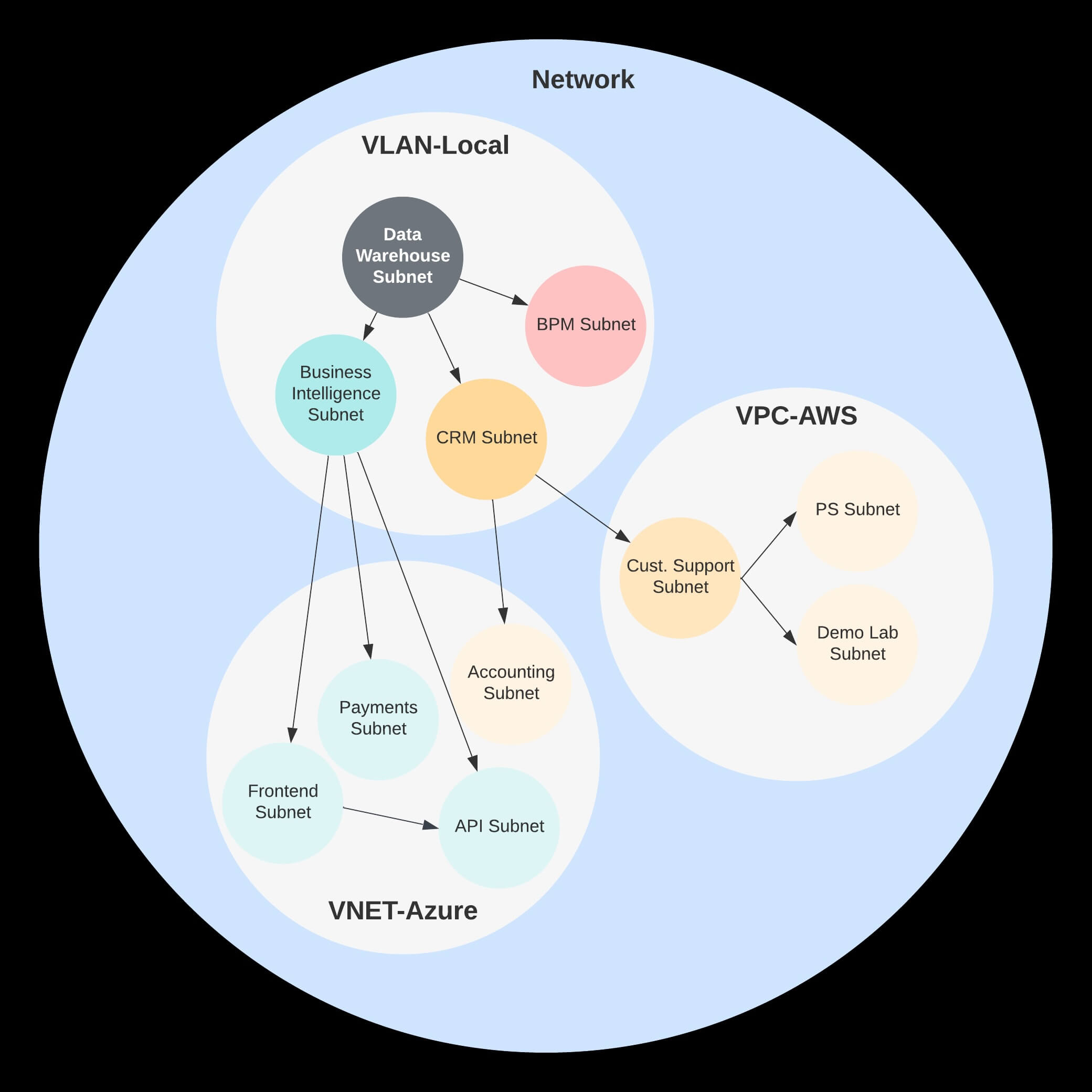

Network engineering has traditionally been about perimeters: hierarchical lines drawn around sets of addresses. Each perimeter defines rules for the traffic that crosses it and includes an enforcement mechanism, such as a firewall or router.


Figure 1: Traditional networks use virtual local area network (VLAN) and subnet perimeters for security.
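To make the perimeter model concrete, here is a minimal Python sketch of first-match rule evaluation at a perimeter, using only the standard `ipaddress` module. The subnets, port and rule table are hypothetical, and real firewalls also track connection state, protocols and much more; the point is only that every decision hinges on which address block the source and destination fall into.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    src: ipaddress.IPv4Network  # perimeter the traffic comes from
    dst: ipaddress.IPv4Network  # perimeter the traffic is entering
    port: int
    action: str                 # "allow" or "deny"

# Hypothetical rules guarding a database subnet: only the app-tier subnet may connect
RULES = [
    Rule(ipaddress.ip_network("10.10.1.0/24"), ipaddress.ip_network("10.10.2.0/24"), 5432, "allow"),
    Rule(ipaddress.ip_network("0.0.0.0/0"), ipaddress.ip_network("10.10.2.0/24"), 5432, "deny"),
]

def evaluate(src: str, dst: str, port: int, default: str = "deny") -> str:
    """Return the action of the first rule whose perimeters contain src and dst."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for rule in RULES:
        if s in rule.src and d in rule.dst and port == rule.port:
            return rule.action
    return default  # nothing matched: fall back to default deny

print(evaluate("10.10.1.20", "10.10.2.5", 5432))  # allow (app tier -> database)
print(evaluate("10.10.3.7", "10.10.2.5", 5432))   # deny (outside the app-tier perimeter)
```

Note that the rule never asks *which application* sent the traffic; the subnet stands in for that.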

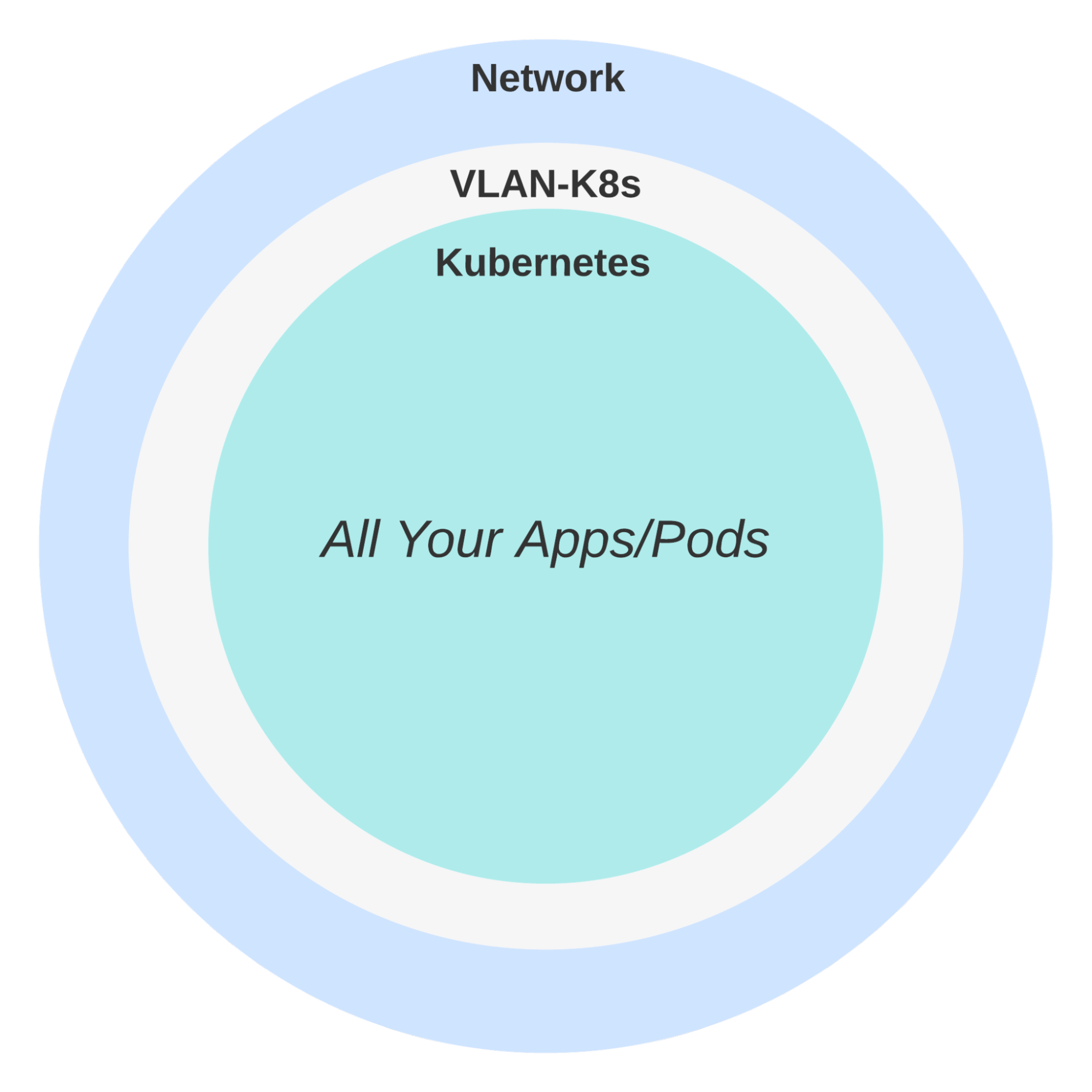

Technically, Kubernetes operates within the boundaries of the perimeter network model. But a cluster, often consisting of hundreds or thousands of applications, occupies just one perimeter. Inside the cluster, the Kubernetes network model expects every pod to be able to reach every other pod directly, without NAT, so traditional perimeters such as VLANs and subnets largely have no place there. This introduces a variety of challenges for network engineers seeking to manage both Kubernetes and non-Kubernetes traffic.


Figure 2: Kubernetes clusters do not use VLAN or subnet perimeters within a cluster.

IPAM: IPv4 networks have a limited amount of address space to work with, which makes IP address management (IPAM) a real constraint. Some common guidance for creating Kubernetes clusters calls for provisioning a /14 (“slash fourteen”) subnet per cluster: 262,144 addresses, or roughly 1.5% of the entire RFC 1918 private address range. It’s no surprise that network engineers whose first interaction with Kubernetes is a developer asking for the largest block of addresses they have ever issued have concerns about this practice.
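The arithmetic behind that concern is easy to check. The sketch below assumes the three RFC 1918 ranges make up the whole private pool, and `share_of_private_space` and `clusters_that_fit` are hypothetical helper names; it shows how many addresses a /14 holds, what share of private space it consumes, and how few /14 blocks fit in 10.0.0.0/8.

```python
import ipaddress

# The three RFC 1918 private IPv4 ranges
PRIVATE_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]
TOTAL_PRIVATE = sum(net.num_addresses for net in PRIVATE_RANGES)  # 17,891,328

def share_of_private_space(prefixlen: int) -> float:
    """Fraction of all RFC 1918 addresses consumed by one block of this prefix length."""
    return 2 ** (32 - prefixlen) / TOTAL_PRIVATE

def clusters_that_fit(prefixlen: int) -> int:
    """How many non-overlapping blocks of this size fit in 10.0.0.0/8 alone."""
    return len(list(PRIVATE_RANGES[0].subnets(new_prefix=prefixlen)))

print(2 ** (32 - 14))                       # 262144 addresses in a /14
print(f"{share_of_private_space(14):.2%}")  # 1.47%
print(clusters_that_fit(14))                # 64
```

In other words, only 64 non-overlapping /14 clusters fit in all of 10.0.0.0/8, before any VMs, data centers or office networks claim a single address.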

The IPAM problem can be resolved by issuing overlapping addresses across clusters and applying source network address translation (SNAT) to outbound traffic. But this solution comes at a cost: once many pods share a translated source address, firewalls and network monitoring tools can no longer attribute traffic to the workload that sent it.
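A toy model makes the attribution problem visible. This sketch assumes pods are translated to their node's IP on egress (a common but not universal setup); all names, addresses and the `Flow` type are hypothetical, and the `app` field stands for ground truth that no firewall actually sees.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Flow:
    src_ip: str
    dst_ip: str
    app: str  # ground truth for the demo; a firewall never sees this field

def snat(flow: Flow, node_ip: str) -> Flow:
    """Source NAT: rewrite the source address to the node's address on egress."""
    return replace(flow, src_ip=node_ip)

def apps_by_source(flows: list[Flow]) -> dict[str, list[str]]:
    """Map each source IP the firewall sees to the (sorted) apps hiding behind it."""
    view: dict[str, set[str]] = {}
    for f in flows:
        view.setdefault(f.src_ip, set()).add(f.app)
    return {ip: sorted(apps) for ip, apps in view.items()}

# Hypothetical: clusters A and B reuse the same pod CIDR, so pod IPs overlap.
# Each pair is (flow leaving a pod, IP of the node that pod runs on).
egress = [
    (Flow("10.0.1.5", "192.168.50.10", "payments"), "172.20.0.11"),   # cluster A
    (Flow("10.0.1.9", "192.168.50.10", "reporting"), "172.20.0.11"),  # cluster A, same node
    (Flow("10.0.1.5", "192.168.50.10", "marketing"), "172.20.0.21"),  # cluster B, same pod IP
]

print(apps_by_source([flow for flow, _ in egress]))
# {'10.0.1.5': ['marketing', 'payments'], '10.0.1.9': ['reporting']}
print(apps_by_source([snat(flow, node_ip) for flow, node_ip in egress]))
# {'172.20.0.11': ['payments', 'reporting'], '172.20.0.21': ['marketing']}
```

Before translation, the overlapping pod IP 10.0.1.5 is already ambiguous across clusters; after translation, the firewall sees one node address standing in for two unrelated applications.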

Firewall: Traditional firewalls identify the source and destination of traffic flows by IP address, port and protocol, and apply policy to allow or deny that traffic. Kubernetes recycles pod IP addresses continuously, so traffic from a given address may represent one application one minute and another application the next. Policy can be written against the cluster subnet as a whole, but that is almost never the level of granularity large enterprises need.
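The sketch below contrasts an IP-based rule with an identity-based one when a pod IP is reused. The timeline, addresses and application names are hypothetical. Kubernetes NetworkPolicy tackles the same problem by selecting pods with label selectors rather than by IP, though this sketch does not model that API.

```python
# Hypothetical timeline of which pod holds a given IP.
POD_AT = {
    ("09:00", "10.0.3.14"): "payments-api",
    ("09:05", "10.0.3.14"): "batch-report",  # payments pod rescheduled; IP handed to a new pod
}

# IP-based rule, written when 10.0.3.14 belonged to payments-api
ALLOWED_IPS = {"10.0.3.14"}
# Identity-based rule: names the workload, not its current address
ALLOWED_APPS = {"payments-api"}

def ip_rule_allows(src_ip: str) -> bool:
    return src_ip in ALLOWED_IPS

def identity_rule_allows(app: str) -> bool:
    return app in ALLOWED_APPS

for (ts, ip), app in POD_AT.items():
    print(ts, app, ip_rule_allows(ip), identity_rule_allows(app))
# 09:00 payments-api True True
# 09:05 batch-report True False
```

The IP rule silently starts admitting a different workload at 09:05, while the identity rule keeps meaning what its author intended.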

IDS/IPS: Intrusion detection and prevention systems inspect not just the source and destination but also the content of network flows to identify and isolate intruders. Kubernetes alone does not interfere with this process, but the common practice of using a service mesh to encrypt traffic between pods, typically with mutual TLS, leaves these systems with only ciphertext to inspect, rendering content inspection ineffective.
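The sketch below illustrates why. It uses naive signature matching as a stand-in for an IDS and, as a loud assumption, a toy XOR keystream derived from SHA-256 as a stand-in for mesh encryption. It is not TLS and must not be used for real encryption; the signatures and request are hypothetical.

```python
import hashlib

SIGNATURES = [b"' OR 1=1 --", b"/etc/passwd"]  # hypothetical IDS signatures

def ids_alerts(payload: bytes) -> list[bytes]:
    """Return every known signature found in the payload (naive pattern matching)."""
    return [sig for sig in SIGNATURES if sig in payload]

def toy_encrypt(payload: bytes, key: bytes) -> bytes:
    """NOT real TLS: XOR with a SHA-256 counter-mode keystream, standing in for mTLS."""
    stream = b""
    counter = 0
    while len(stream) < len(payload):
        stream += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(p ^ s for p, s in zip(payload, stream))

request = b"GET /search?q=' OR 1=1 -- HTTP/1.1\r\nHost: accounts\r\n\r\n"
print(ids_alerts(request))                                    # [b"' OR 1=1 --"]
print(ids_alerts(toy_encrypt(request, b"mesh-session-key")))  # [] (nothing to match)
```

The attack is still on the wire; the IDS simply cannot see it unless it terminates or is handed the session keys.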

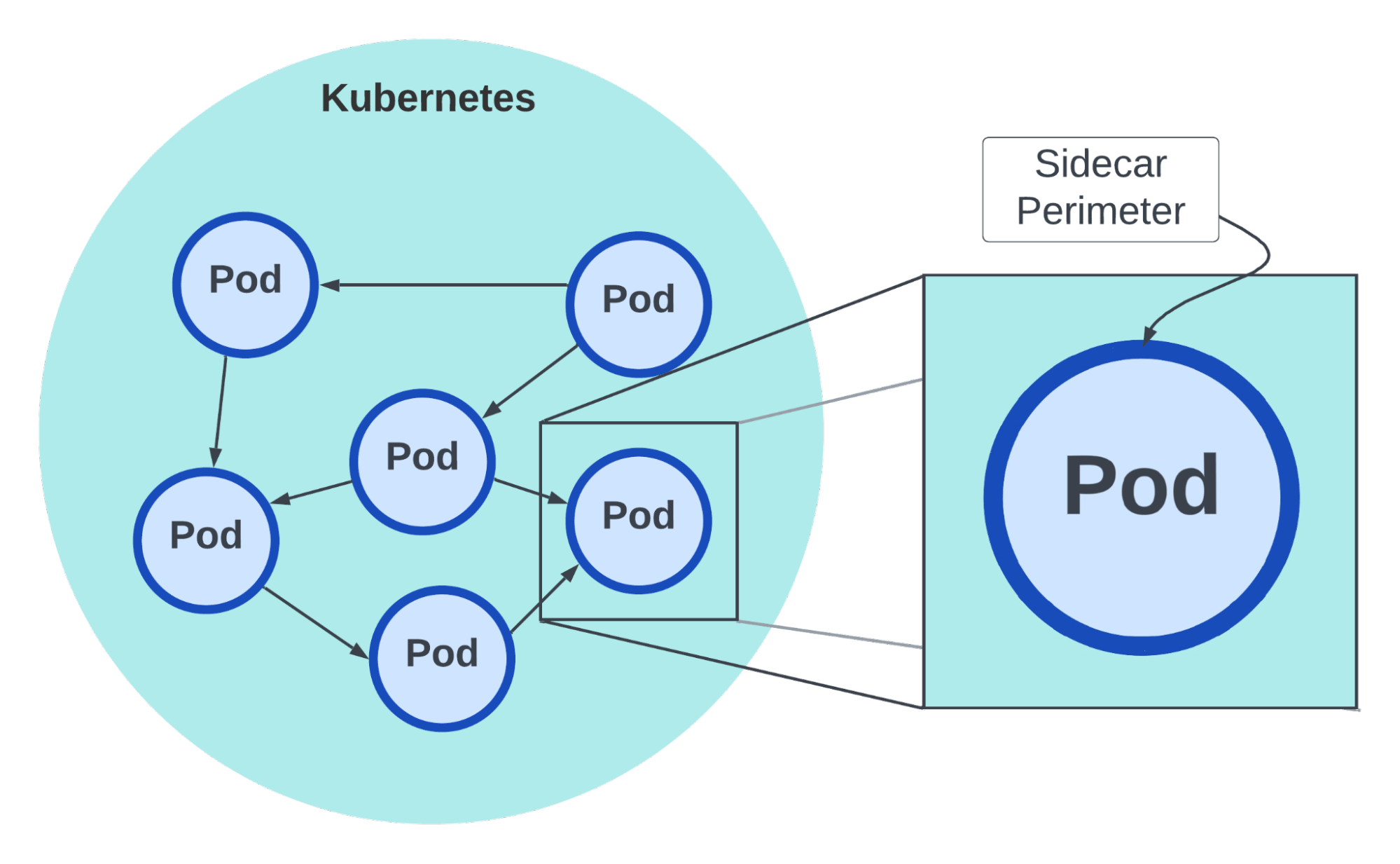

Within Kubernetes, a service mesh can help rebuild the perimeter model, but with each process having its own perimeter and enforcement. So what about applications with dependencies both inside and outside Kubernetes? How do we get information out of the black hole? Black holes account for only a small share of the universe’s mass (by some estimates, about 1% of its ordinary matter), and Kubernetes likewise hosts only part of a typical enterprise’s workloads, so we need a solution that works for both worlds. We need a standard model of application networking.



Figure 3: Service mesh creates a perimeter around every instance or pod.

Mature enterprises seeking to modernize their application stack should insist on a standard model for application networking, one that works as well for VM and bare-metal workloads as it does for Kubernetes. By adopting a single language for network definition and policy, these enterprises should see a substantially better security posture than companies that divide their network between containers and VMs, and should be able to modernize with greater agility as well.
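As a minimal illustration of what a single policy language could mean, the sketch below evaluates every flow against one identity-based policy table, whether the workload is a pod or a VM. The identities, addresses, `Workload` type and `POLICY` table are all hypothetical; in practice the identity would typically come from workload certificates (Istio, for example, encodes SPIFFE IDs in them) rather than from a plain string field.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Workload:
    identity: str  # hypothetical workload identity, independent of where it runs
    platform: str  # "kubernetes", "vm" or "bare-metal": irrelevant to policy
    address: str   # may change at any time; deliberately unused by policy

# One hypothetical policy table for every platform: (source identity, destination identity)
POLICY = {
    ("web-frontend", "payments-api"),
    ("payments-api", "core-ledger"),
}

def allowed(src: Workload, dst: Workload) -> bool:
    """Decide purely on workload identity, never on platform or IP address."""
    return (src.identity, dst.identity) in POLICY

frontend = Workload("web-frontend", "kubernetes", "10.0.3.14")
payments = Workload("payments-api", "kubernetes", "10.0.7.2")
ledger = Workload("core-ledger", "vm", "192.168.40.8")  # legacy VM outside the cluster

print(allowed(frontend, payments))  # True  (pod -> pod)
print(allowed(payments, ledger))    # True  (pod -> VM, same rule language)
print(allowed(frontend, ledger))    # False (no rule, so denied by default)
```

Because the address field never enters the decision, IP recycling, overlapping pod CIDRs and SNAT stop affecting what the policy means.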

To learn more about Kubernetes and the cloud native ecosystem, join us at KubeCon + CloudNativeCon Europe in Paris, from March 19-22.